
Category: Blog

-

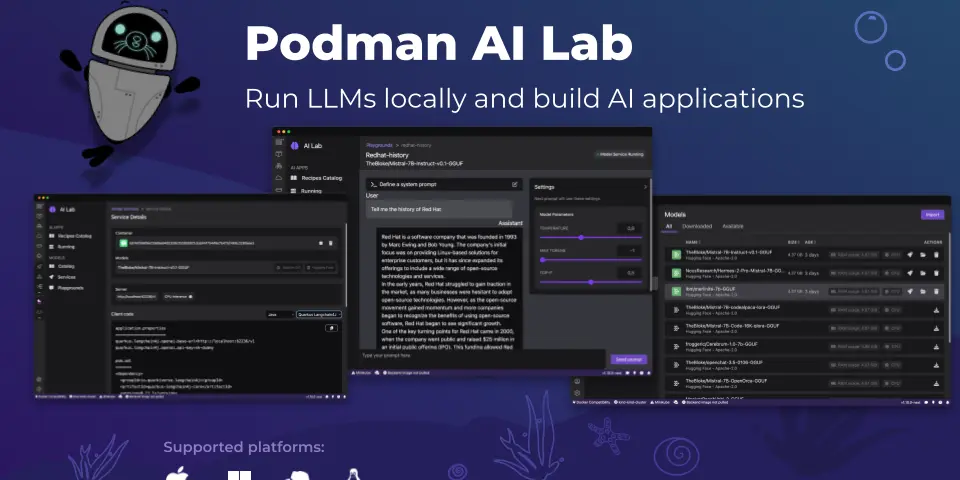

Podman AI Lab – Containerized Tools for Working on LLMs

Linux vendor Red Hat has released Podman AI Lab, an extension for the Podman Desktop container management utility that makes it simple to build and deploy generative AI applications in containers on your local development machine. The extension includes a GUI for getting started, sample applications, a catalog of models, and a local model…

-

Nvidia VILA – Visual Language Intelligence for the Edge

Nvidia has released a new open-source visual language model called VILA, which can perform visual reasoning via a combination of text and images. The model, checkpoints, and training data are all fully open source and available on GitHub. There are 3B, 8B, 13B, and 40B versions of the model, with increasing accuracy and improved responses…

-

Reka Announces Reka Core LLM

Reka is an AI startup building large language models and multimodal language models. It recently released its largest model thus far, called Reka Core. Reka claims that Core is comparable in performance and accuracy to models from OpenAI, Google, and Anthropic, and that it can run on-device, on a server, or in the cloud via API…

-

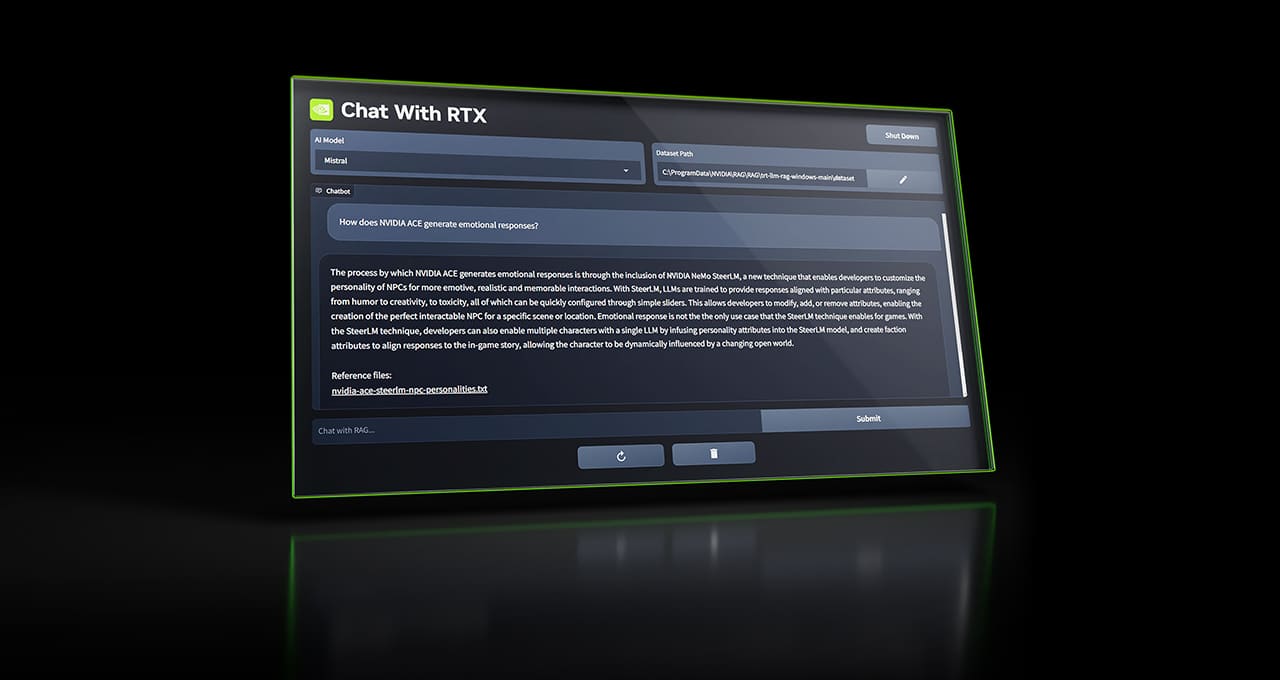

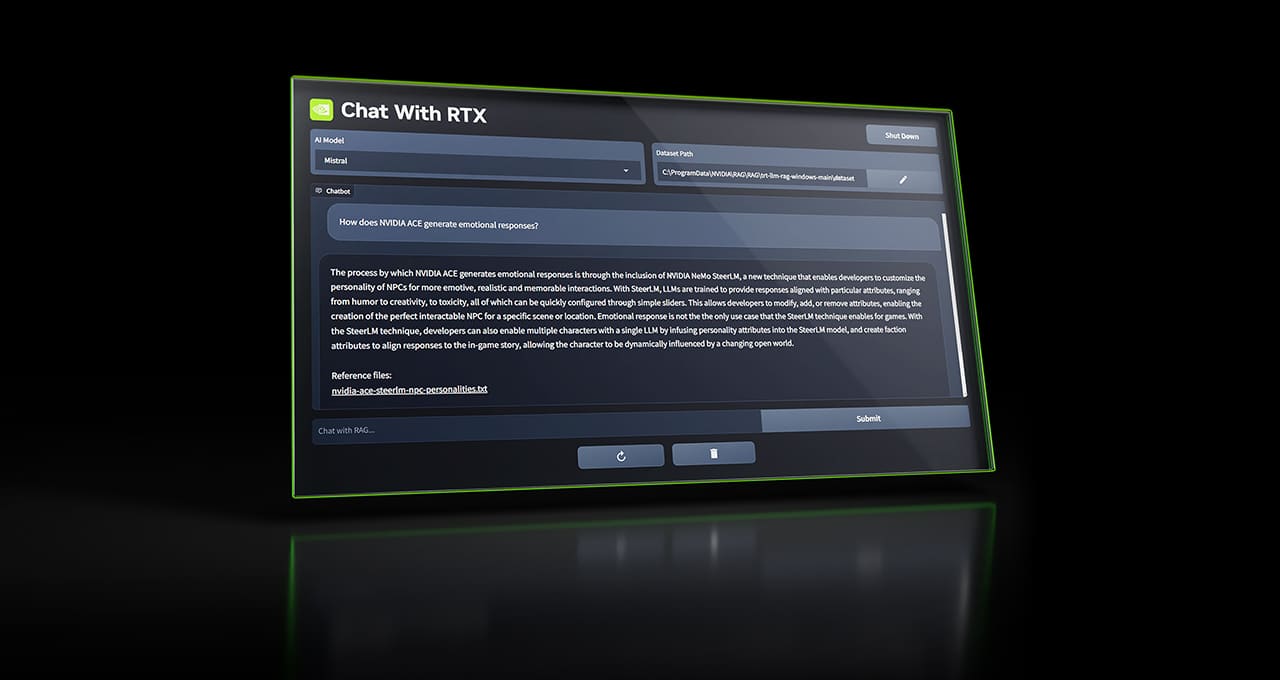

Nvidia ChatRTX Update: New Models

The most recent update to Nvidia’s ChatRTX application is out. ChatRTX allows Windows users to chat with a local LLM for answers and information, as well as contextualize and distill knowledge from photos, documents, and other media on their local machine. And because the model and the artifacts are running locally, no information is sent…

-

Running the Microsoft Phi-3 Large Language Model

Microsoft released the first of several versions of its Phi-3 Large Language Model yesterday, and although this is what Microsoft considers a “small” model, it seems to be performing quite well! According to Microsoft, Phi-3 models outperform models of the same size and next size up across a variety of benchmarks that…

-

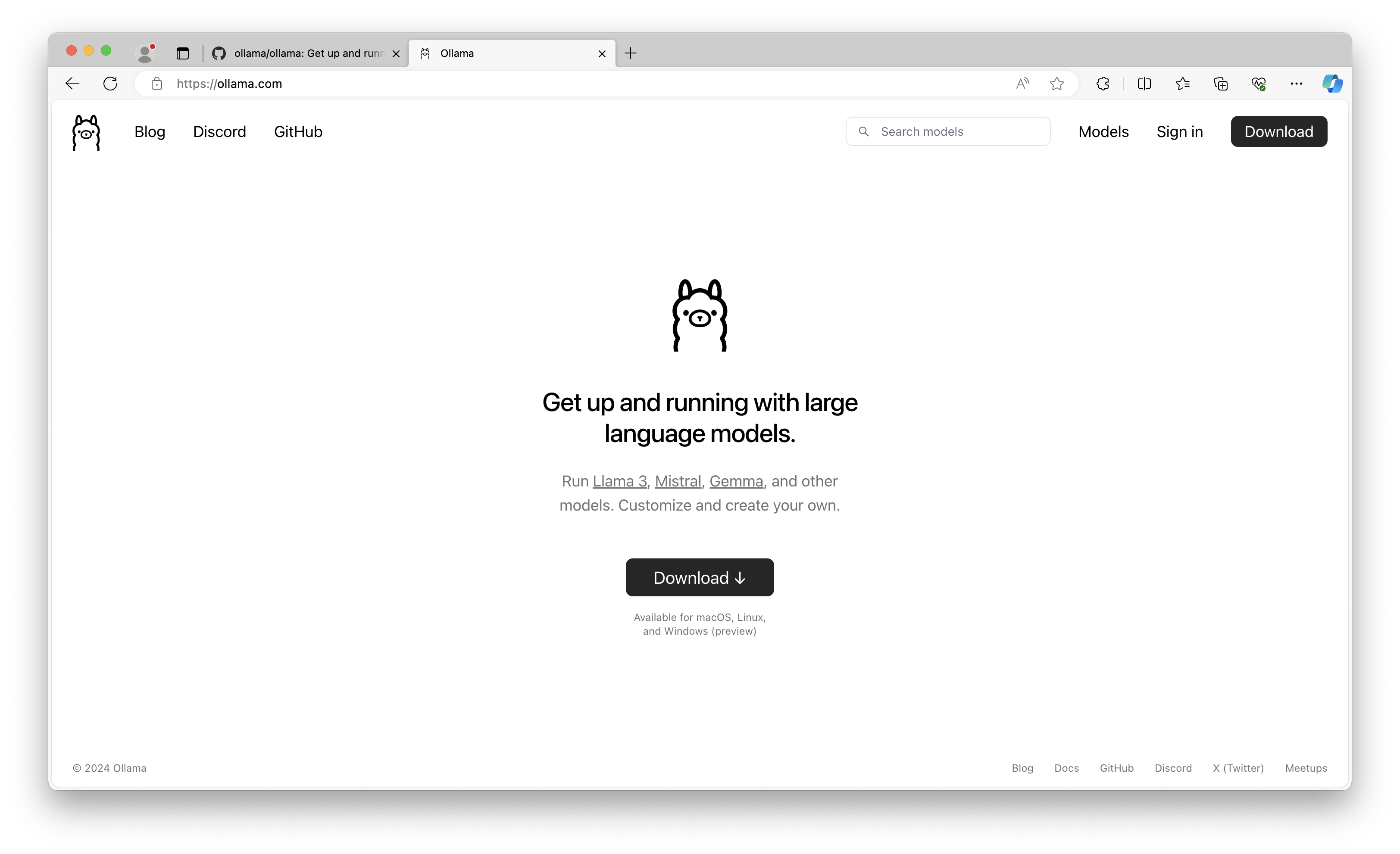

How to Run Llama-3 Locally on Your Computer, the Easy Way

Most users of LLMs such as ChatGPT or Microsoft Copilot interact with these Large Language Models through either a website or the built-in capabilities of Windows. In either case, the LLM is running in the cloud, and the user is, of course, sending data back and forth over the internet while interacting with it…

-

Meta Releases Llama-3 LLM

In an announcement this morning, Meta released 8B and 70B versions of the Llama 3 large language model. A 400B version is still in training, but the 8B and 70B parameter models are available now on Hugging Face. This state-of-the-art LLM includes the following features, according to Ahmad Al-Dahle, VP…

-

Nvidia TensorRT Acceleration for Stable Diffusion

For generative AI developers looking to run Stable Diffusion or Stable Diffusion XL on their own machines, Nvidia maintains a GitHub repository that houses an extension for the popular Automatic1111 Stable Diffusion Gradio web application. Once you have Stable Diffusion up and running from the Automatic1111 repository, the TensorRT installation is as simple as…

-

Getting Started with Local LLMs the Easy Way – ChatRTX for Nvidia GPUs

While most customers using the Nvidia GenAI Developer Kit will likely have existing machine learning pipelines and workflows, newcomers to local large language model development might find it convenient to experiment with Nvidia’s own sample application, called ChatRTX. ChatRTX makes it simple to run a local…

-

The Definitive Notes on Running LLM Training on AMD GPUs

For developers working on Large Language Model development, fine-tuning, and even inference on AMD Radeon or Instinct GPUs, there is a very thorough and well-maintained collection of notes and instructions at https://llm-tracker.info/howto/AMD-GPUs. It covers ROCm installation, driver installation across a variety of operating systems, notes on different generations of hardware,…